Retinomorphic Vision Sensors

Our lab designs retina-inspired cameras that capture the visual scene and convert it into 'event' streams. Events can encode different quantities, such as temporal contrast or spatial contrast. We investigate novel event-detection circuits and readout mechanisms, such as the globally asynchronous, locally synchronous (GALS) methodology, to implement ultra-low-power cameras for applications such as drones, security surveillance, and traffic monitoring. The qDVS imager uses a query-driven Dynamic Vision Sensing (qDVS) approach to computational imaging that substantially improves the achievable pixel density and energy efficiency of visual event coding.

The qDVS chip architecture combines the complementary advantages of frame-scanning active pixel sensors (APS) and event-driven DVS (eDVS) CMOS imagers. Compared to state-of-the-art eDVS imagers, qDVS delivers up to a 20x reduction in power and up to a 2x increase in pixel density, achieving a record energy efficiency to date of 6.3 pJ/pixel-event.
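As a rough illustration of the idea, the sketch below scans every pixel once, as a frame-scanning imager would, and emits an ON or OFF event only where the log-intensity has changed by more than a threshold since that pixel's last event. It is a software toy under stated assumptions, not the qDVS circuit: the function names, the threshold value, and the use of log-intensity differences are illustrative choices. The energy estimate simply multiplies the event count by the 6.3 pJ/pixel-event figure quoted above.

```python
import math

# Energy per pixel-event quoted in the text above (6.3 pJ).
ENERGY_PER_EVENT_J = 6.3e-12


def query_frame(frame, reference, threshold=0.2):
    """Query every pixel once and emit temporal-contrast events.

    frame:     2D list of positive intensities for the current scan.
    reference: 2D list of log-intensities stored at each pixel's last
               event; updated in place when a pixel fires.
    threshold: hypothetical contrast threshold in log-intensity units.
    Returns a list of (row, col, polarity), polarity +1 (ON) or -1 (OFF).
    """
    events = []
    for r, row in enumerate(frame):
        for c, intensity in enumerate(row):
            log_i = math.log(intensity)
            diff = log_i - reference[r][c]
            if abs(diff) >= threshold:
                events.append((r, c, 1 if diff > 0 else -1))
                reference[r][c] = log_i  # remember the level at this event
    return events


def readout_energy_joules(n_events):
    """Back-of-envelope energy for n_events at 6.3 pJ per pixel-event."""
    return n_events * ENERGY_PER_EVENT_J


previous = [[100.0, 100.0], [100.0, 100.0]]
current = [[100.0, 150.0], [60.0, 101.0]]
reference = [[math.log(v) for v in row] for row in previous]
events = query_frame(current, reference)
print(events, readout_energy_joules(len(events)))
```

Only the two pixels whose brightness changed substantially produce events; the unchanged pixel and the pixel that brightened by 1% stay silent, which is where the data reduction relative to a full frame readout comes from.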

We are currently exploring asynchronous, frame-free stereo vision algorithms that can be implemented on low-power hardware for low-latency, real-time 3D reconstruction of the visual scene.

Corticomorphic Compute-In-Memory Architectures

Project 1: AI Hardware

We developed an architecture, implemented in a 130-nm CMOS/RRAM process, that delivers the highest reported energy efficiency of 74 TMACS/W for RRAM-based Compute-In-Memory architectures while also offering dataflow reconfigurability to address the limitations of previous designs. The chip features a 16x16 array of mixed-signal, ultra-low-power reconfigurable neurons with multiple modes that implement various activation functions, including step, sigmoid, and Rectified Linear Unit (ReLU), with binary or ternary output states. This reconfigurable, energy-efficient design makes the architecture suitable for deep learning and neuromorphic applications such as Convolutional Neural Networks (CNNs) and general event-driven computing. A CNN implemented on the chip achieves prediction accuracies of 94.8% with sigmoid activation and 96.9% with ReLU activation.
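The neuron modes named above can be sketched in software as follows. This is a minimal functional model, not a description of the mixed-signal circuit: the function names, the quantization thresholds, and the way binary and ternary outputs are derived are all assumptions made for illustration.

```python
import math


def step(x):
    """Step activation: 1 for non-negative input, 0 otherwise."""
    return 1.0 if x >= 0 else 0.0


def sigmoid(x):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Rectified Linear Unit activation."""
    return max(0.0, x)


def binarize(y, threshold=0.5):
    """Hypothetical binary output state: 1 above the threshold, else 0."""
    return 1 if y >= threshold else 0


def ternarize(x, threshold=0.5):
    """Hypothetical ternary output state: -1, 0, or +1."""
    if x > threshold:
        return 1
    if x < -threshold:
        return -1
    return 0


def neuron(inputs, weights, activation):
    """Multiply-accumulate over the inputs, then apply the chosen mode."""
    mac = sum(i * w for i, w in zip(inputs, weights))
    return activation(mac)


inputs = [1, 0, 1]
weights = [0.5, -1.0, 0.25]
print(neuron(inputs, weights, relu))               # ReLU mode
print(binarize(neuron(inputs, weights, sigmoid)))  # sigmoid mode, binary output
print(ternarize(-0.75))                            # ternary output state
```

Passing the activation as a parameter mirrors the reconfigurability described above: the same multiply-accumulate result can be routed through a different mode without changing the rest of the computation.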

Our work was published in Nature recently!

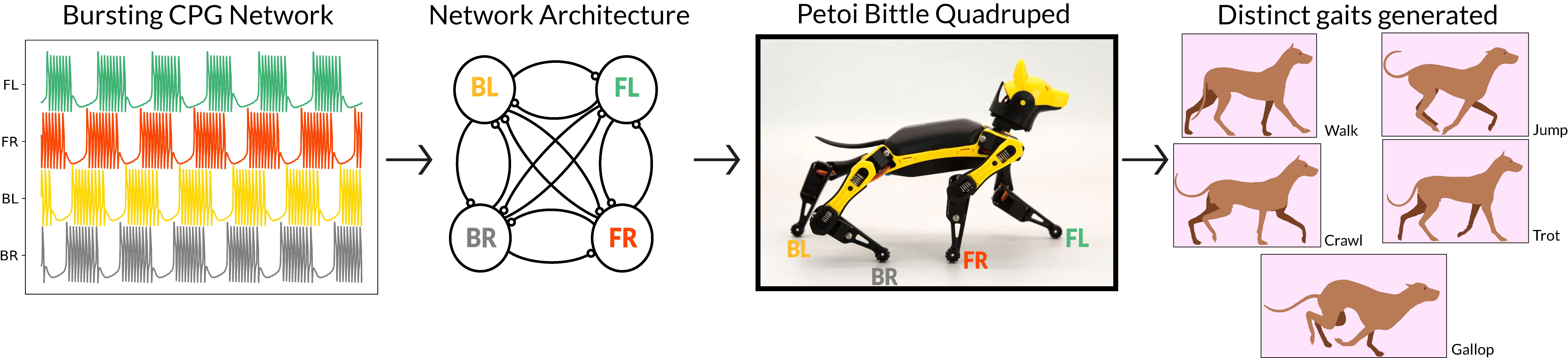

Bio-inspired Robotics

This project aims to design and implement bio-inspired robots using a bio-realistic neuron model that closely imitates the behavior of actual neurons, and eventually to incorporate our model into an entirely hardware-based spiking neural network (SNN). This interdisciplinary project involves neuroscience (neuron behavior), the mathematics of non-linear dynamical systems, control theory (mixed feedback over multiple timescales), circuit design (ASIC chip design), and device physics (engineering novel devices such as memristors). We have developed a software model that can be tuned to produce multiple neuron behaviors (such as spiking and bursting), and a chip implemented in TSMC 180nm technology that verifies the software model. Currently, we are investigating multiple approaches to building our devices that provide the necessary non-linearity, and we are working on a common framework for comparing various neuron models and their active regions.
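To show how a single set of equations can be tuned between spiking and bursting, the sketch below uses the well-known Izhikevich neuron model as a stand-in. It is not our neuron model, which is not specified here; the parameter sets are the "regular spiking" and "chattering" values from Izhikevich's published model, and the input current, time step, and forward-Euler integration are illustrative assumptions.

```python
def simulate_izhikevich(a, b, c, d, current=10.0, duration_ms=1000.0, dt=0.25):
    """Forward-Euler simulation of the Izhikevich neuron model.

    a, b, c, d: model parameters (recovery rate, recovery sensitivity,
                reset voltage, recovery increment after a spike).
    current:    constant input current (illustrative value).
    Returns the list of spike times in milliseconds.
    """
    v = c        # membrane potential (mV), started at the reset value
    u = b * v    # recovery variable
    spikes = []
    for k in range(int(duration_ms / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:            # spike: record the time and reset
            spikes.append(k * dt)
            v = c
            u += d
    return spikes


# Same equations, two parameter sets: only c and d differ.
regular = simulate_izhikevich(0.02, 0.2, -65.0, 8.0)   # regular spiking
bursting = simulate_izhikevich(0.02, 0.2, -50.0, 2.0)  # chattering (bursting)
print(len(regular), len(bursting))
```

Changing only the reset voltage and the post-spike recovery increment switches the neuron from isolated spikes to bursts, which is the kind of controllable behavior described above.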

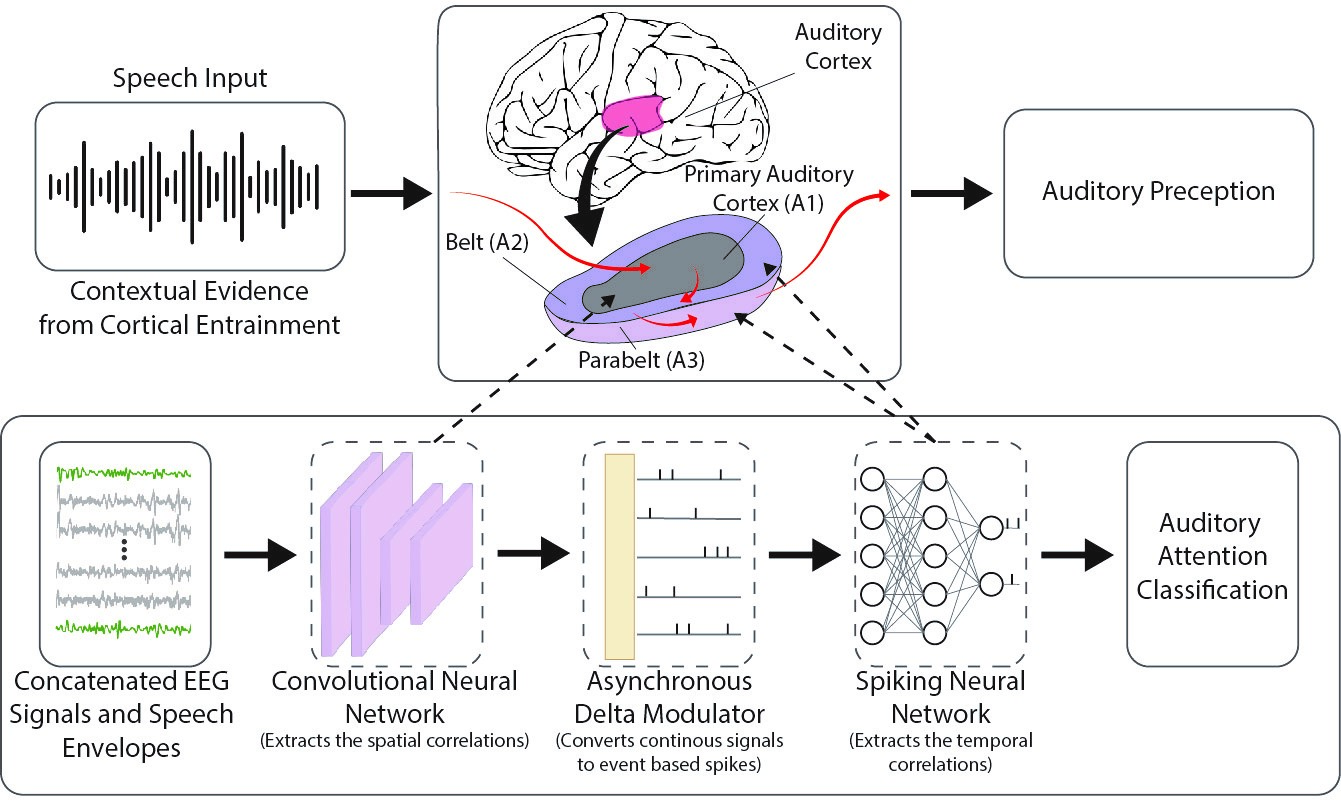

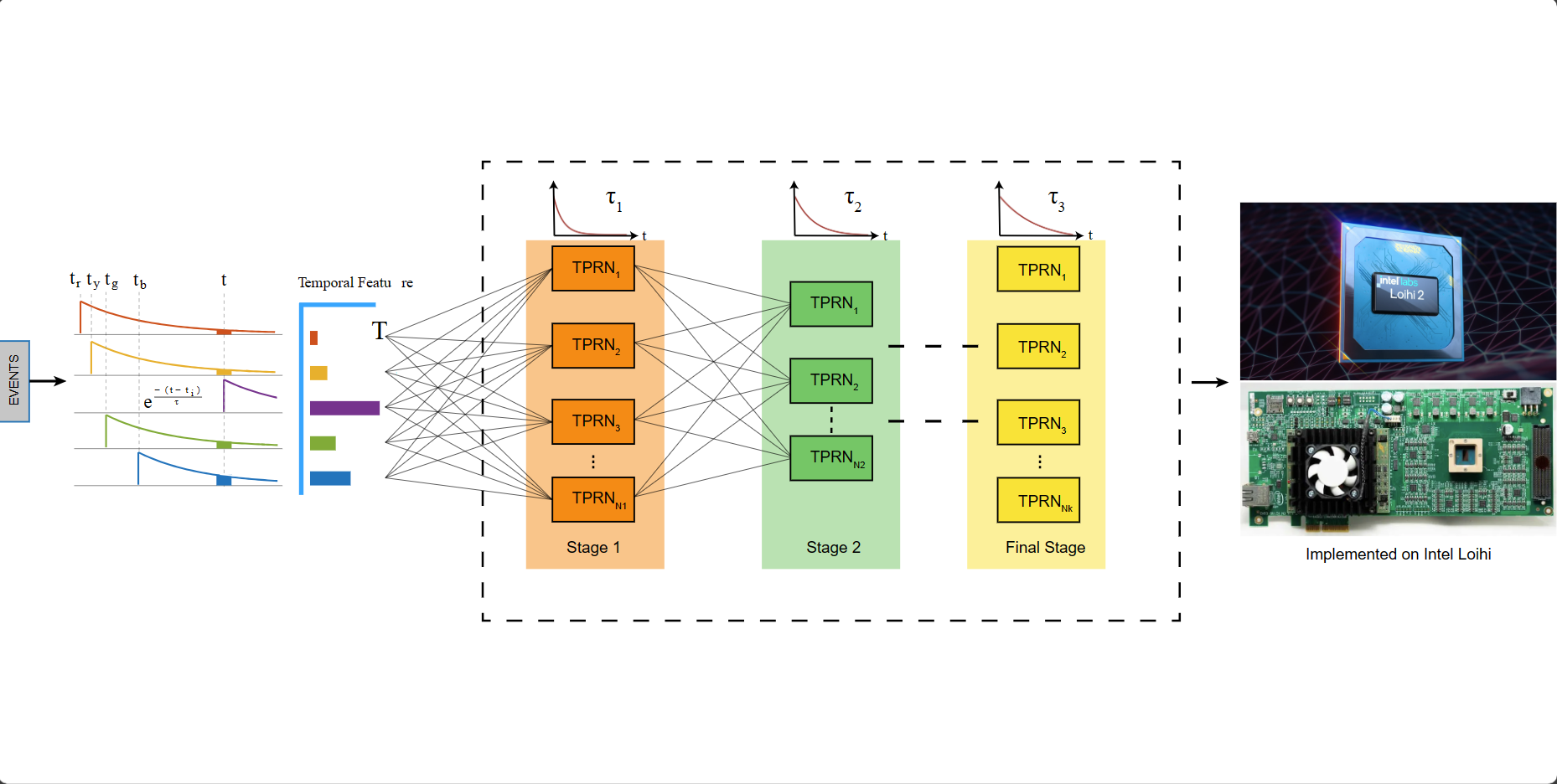

Event-based Algorithms for Learning and Inference

We developed a learning rule, called inverted spike-time dependent plasticity (iSTDP), that can learn temporal patterns using only spike-timing information. We demonstrated keyword spotting on the TIDIGITS database, implemented on Intel Loihi, with 11% error.
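The exact form of the iSTDP rule is not given here. Purely as an illustration of a timing-only weight update, the sketch below shows the classical pair-based exponential STDP window and a version with its sign flipped. Treating "inverted" as a sign flip is an assumption, as are the function names, amplitudes, and time constant.

```python
import math


def stdp(delta_t_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Classical pair-based STDP weight change.

    delta_t_ms = t_post - t_pre. Pre-before-post (positive delta_t)
    potentiates; post-before-pre (negative delta_t) depresses.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_ms)
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_ms)
    return 0.0


def inverted_stdp(delta_t_ms, **kwargs):
    """Assumed inverted window: the classical update with its sign flipped."""
    return -stdp(delta_t_ms, **kwargs)


for delta_t in (-10.0, 10.0):
    print(delta_t, stdp(delta_t), inverted_stdp(delta_t))
```

Both updates depend only on the time difference between a presynaptic and a postsynaptic spike, so no rate estimates or external error signals are needed.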

We are currently exploring how reward modulation using excitatory and inhibitory synapses, combined with iSTDP, can help implement robust temporal pattern recognition for vision, audio, and motor control applications.

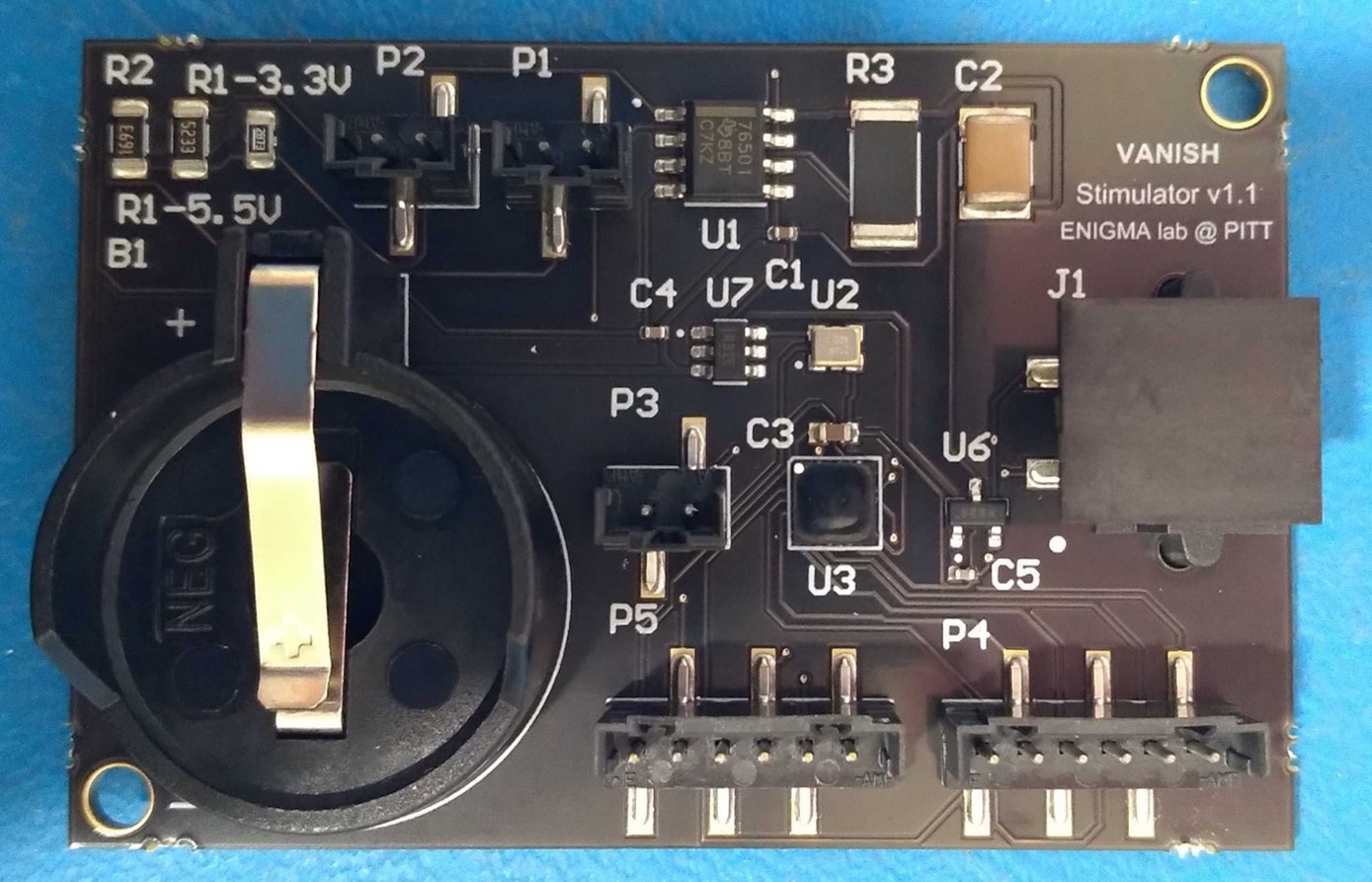

Biomedical Instrumentation

In this project, we designed and fabricated a compact stimulator chip (ASIC) with multiple modes of operation: monophasic current stimulation, biphasic current stimulation, and long-term electrode degeneration. The chip performs adaptive charge balancing using chip-in-the-loop feedback that actively measures the tissue-electrode impedance.